Genomic Intelligence for Precision Medicine Drug Development

Empower Your Team with Genetic Intel to Propel Your Drug Programs Forward

Precision Medicine is Rooted in Genomics

When you’re developing therapeutics for rare diseases and cancers with complex genetic drivers, you need a solid foundation of genomic evidence.

Every paper, every pathogenic variant, and every patient may yield precious information.

Partner with Genomenon to get the genetic intel you need to support evidence-based decisions at every phase of your drug program, and free your team to focus on other priorities.

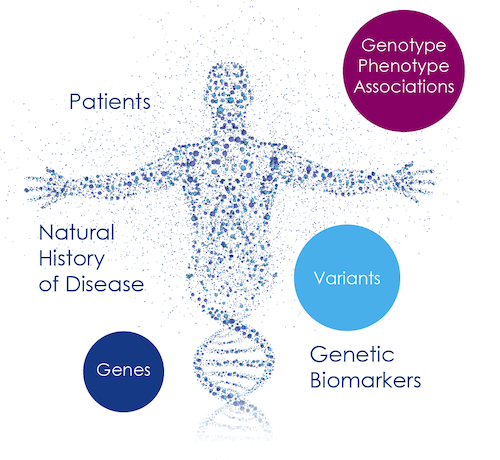

Genetic Intelligence can reveal invaluable information about genotype:phenotype associations, the natural history of disease, genetic biomarkers, and disease pathways that might otherwise be lost in the scientific literature and disparate public data resources.

Aggregating, integrating, and extracting evidence from genomic data is hard

Genetics is complex. High-throughput technologies have transformed how we research the roles of genes and other biomolecules in the context of disease. But the sheer volume of data being produced also complicates the process of identifying relevant genetic disease information and actionable insights.

To further complicate matters, gene name errors and synonyms, as well as inconsistencies in naming variants, make curating genomic evidence from the literature incredibly challenging.

Time Consuming & Costly

Aggregating and curating genomic data can take months, if not years, and can approach or exceed $500K USD in labor and missed opportunity costs

Difficult to Manage

Extracting actionable evidence from disparate sources requires training in ACMG nomenclature and data integration processes

Prone to Error & Gaps

Missing gene data is all too common because 76% of variant mentions are in the wrong format (43% of known causative variants are missed)

Reinforce the Foundation of your Precision Medicine Program with Genomic Intelligence

Put Genomenon to Work for You

If you’re grappling with the Herculean task of aggregating genomic data for your rare disease or personalized cancer medicine program, don’t do it alone. Talk to Genomenon.

With Genomenon Genomic Intelligence Services, you gain access to our extraordinary team of highly trained “gene detectives”, who use our proven processes and AI technology to curate and continually update our Mastermind® human exome knowledgebase.

Unlock Disease Biology

Identify targets and biomarkers, optimize clinical trial inclusion data, and prepare for regulatory submissions quickly and efficiently

Reveal Genetic Drivers

Jumpstart your program with a firm foundation of genomic evidence for disease mechanisms and natural history studies

Gain Diagnostic Insights

Assess global trends in clinical diagnostic lab searches in Mastermind and raise awareness of your therapeutics and trials

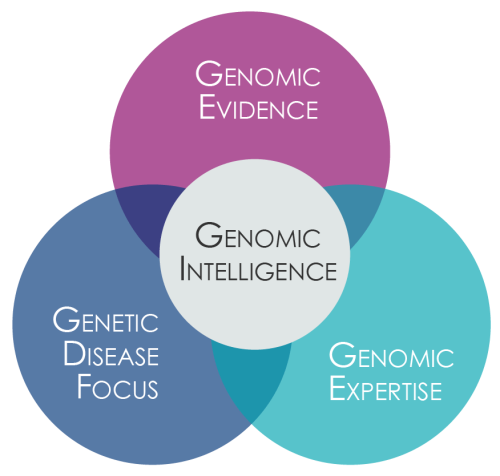

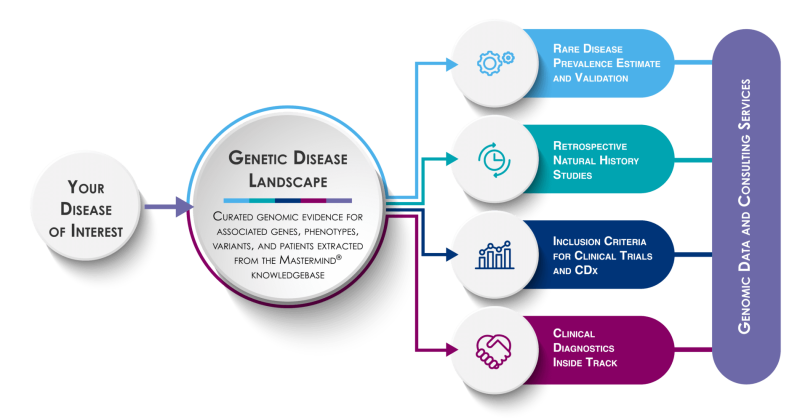

Elements of Genomenon Genomic Intelligence

What sets Genomenon professional services apart from biomedical data curation? We specialize in the mining, analysis, and interpretation of genetic evidence for rare genetic diseases and cancer. Through our Genomic Intelligence solutions, you gain access to our:

AI-Powered Genomic Evidence Platform. Reveal actionable insights from relevant genetic data through our exhaustive Mastermind® knowledgebase and genomic search engine.

Genomic Intelligence Curation Pros. Identify the precise genomic evidence you need with a tech-enabled team laser-focused on rare genetic diseases and cancers

Processes optimized for Genetic Disease. Leverage the same well-honed curation processes and time-saving AI technology we’ve developed to curate the human exome in record time.

Expertly Curated Genomic Evidence, Tailored to Fit your Program

Our Genomic Intelligence workflow ensures you don’t miss critical evidence

We start by listening to you to understand exactly what you need, then we generate a deep genetic disease landscape to identify all the genomic information associated with your disease of interest. We curate, validate, and distill this wealth of information to deliver the precise genetic data you need.

Rare Perspectives Roundtable Series

The End of VUS? Impact on Diagnosis and Treatment of Rare Diseases – Webinar with Pharming & Ultragenyx

Discover Genetic Biomarkers and Disease Mechanisms

Mastermind for Genomic Evidence Mining

• Simplify and speed up literature search and database compilation

• Identify and access full-text papers and genomic evidence

• Free your team to focus on drug development, not variant search terminology

Rely on one AI platform for genomic data mining tasks

Standardized Locus-Specific Databases

• Save time with fully curated and richly annotated LSDBs

• Gain an understanding of genotype:phenotype associations

• Collaborate with us to expertly curate your disease genes of interest

Leverage ready-to-analyze variant datasets

Rare Disease Prevalence Estimation and Validation

• Rely on more accurate protocols and data for calculating genetic birth prevalence

• Get up-to-date population data and evidence for rare autosomal recessive diseases

Assess Market Potential for Orphan Drugs

Investigate Disease at the Patient Level

Retrospective Natural History Studies

• Get unbiased, evidence-based insights to rapidly assess your disease market

• Validate rare genetic disease prevalence estimates with up-to-date supporting evidence and population data

Define disease phenotypes and enroll the right patients

Inclusion Criteria for Clinical Trials and CDx

• Quickly identify endpoints backed by evidence to inform prospective NHS and clinical trial design

• Expand disease understanding at the patient level

Optimize Patient Profiles for Clinical Trials

Global Insights into Clinical Diagnostic Trends

• Increase awareness of your clinical trials at the point of diagnosis

• Get actionable market insights with curated Mastermind user data representing genetic testing labs around the world

Increase diagnosis of genetic disease patients

Discover how you can supercharge your genetic disease and cancer drug development with genomic intelligence.

Genomic Intelligence in Action

Dr. Susanne Rhoades of Loxo@Lilly discusses challenges her team encountered while working to get selpercatinib and the Oncomine™ Dx Target Test (ODxTT) approved as an Rx/CDx for thyroid cancer in Japan. She describes how she and her team partnered with Genomenon to respond to requests from the Japanese regulatory agency.

Genomenon collaborated with KOLs at NHGRI and Muenster University Children’s Hospital to estimate the prevalence of ENPP1 Deficiency. This Orphanet Journal of Rare Diseases paper describes how we applied a comprehensive literature review and updated population data to reveal that this disease is more than 3x more prevalent than previously found.

Genomenon CSO Dr. Mark Kiel joins Dr. Gus Khursigara, VP of Medical Affairs and Clinical Strategy at Inozyme Pharma, to discuss how a computational approach to curating genomic evidence offers an efficient and economical way to systematically identify and analyze all reported cases of a rare disease and interpret the associated genetic variants.

We also encourage you to check out:

- Sarafrazi et al. (2021) Novel PHEX gene locus-specific database: Comprehensive characterization of vast number of variants associated with X-linked hypophosphatemia (XLH). Human Mutation, 43, 143-157. Read this paper to learn how we partnered with Alexion to compile a PHEX LSDB and about the findings derived from this work.

- Rare Perspectives: It Takes a Village: Developing Treatments for Rare Disease. Watch this webinar to hear rare disease experts discuss challenges of orphan drug development and opportunities for the rare disease community to work together toward life-saving treatments